RAG Med Chat

A medical assistant that analyzes uploaded health reports, retrieves relevant medical insights, and answers user queries in real time using Gemini AI, Hugging Face embeddings, and the Pinecone vector database.

Overview

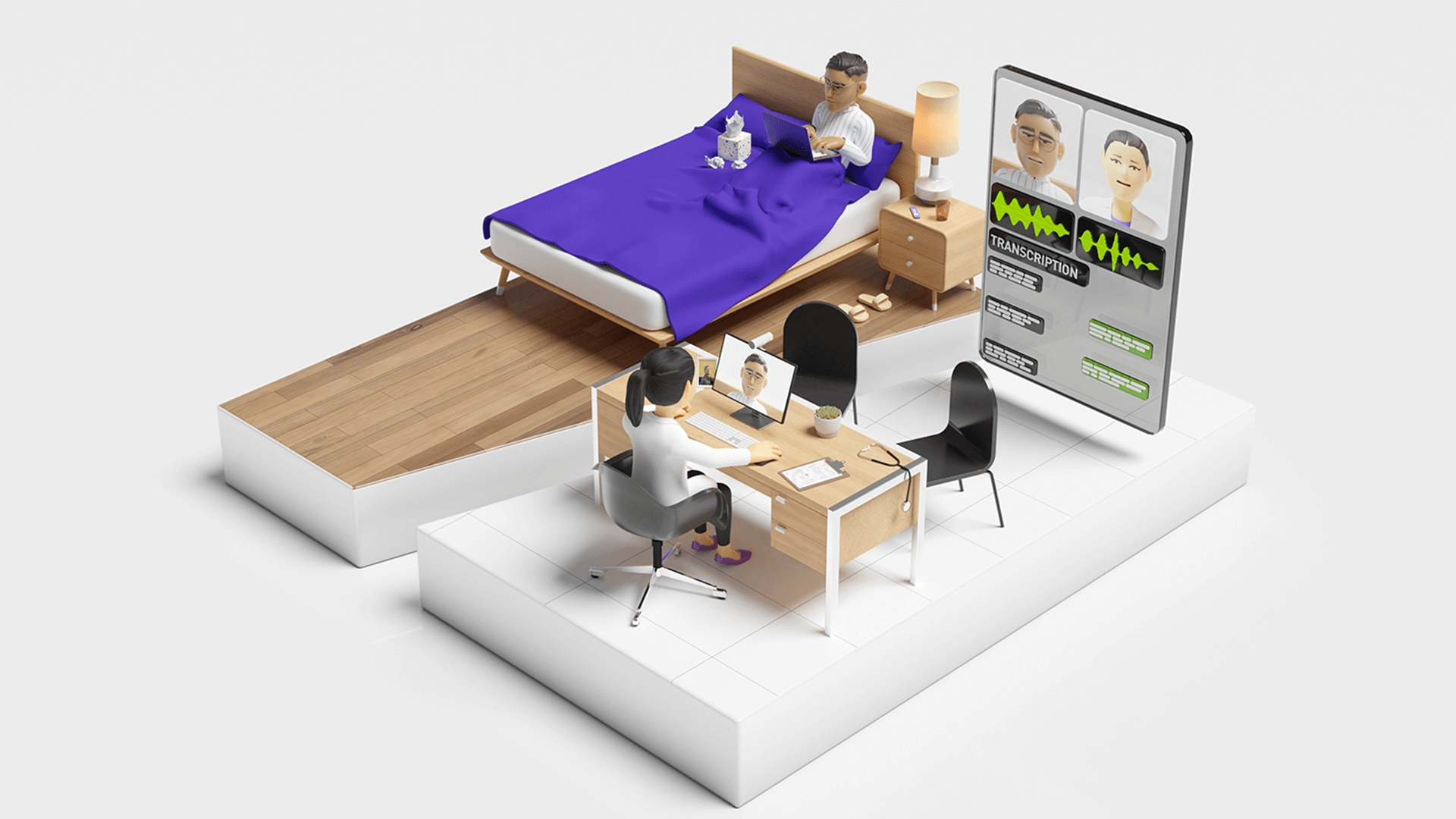

The AI-Powered Medical Report Chat Assistant is a healthcare support system built with Next.js 14, the Vercel AI SDK, and Google’s Gemini AI. Users upload medical reports (such as a CBC or lipid profile); the system extracts key information using Gemini Vision, stores relevant clinical knowledge in Pinecone, and uses a Retrieval-Augmented Generation (RAG) pipeline to answer patient queries.

This project demonstrates how AI-driven document understanding, semantic search, and streaming LLM responses can be combined in a single real-time chat interface. It bridges the gap between static lab reports and interactive, AI-assisted explanations.

Problem Statement

Patients and even medical professionals often struggle to interpret complex health reports. Traditional report summaries are static and lack contextual explanations, and there is no interactive way to ask questions like:

- “What does my hemoglobin level mean?”

- “Should I be concerned about low platelets?”

- “What should I ask my doctor next?”

The challenge was to build an intelligent system that could:

- Understand medical report data from images or PDFs.

- Retrieve relevant medical knowledge dynamically.

- Generate safe, human-like explanations with live streaming responses.

Solution

The solution integrates a Retrieval-Augmented Generation (RAG) pipeline with Gemini AI to create a conversational medical assistant. Key steps:

- Report Upload & Extraction: Users upload a PDF or image. Gemini Vision extracts structured text data.

- Knowledge Retrieval: The extracted text and the user’s question are converted to embeddings using the Hugging Face API, then queried against a Pinecone vector store containing pre-encoded medical knowledge.

- Prompt Augmentation: The retrieved clinical findings are injected into a crafted prompt for Gemini, enhancing factual accuracy.

- Streaming AI Response: Using the Vercel AI SDK, Gemini streams the response token by token to the chat UI.

- Markdown Rendering: Responses are rendered as Markdown, with DOMPurify sanitization for secure rich-text formatting.

This enables users to chat naturally with their medical data, with the AI providing contextual, medically aware answers.
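The knowledge retrieval and prompt augmentation steps above can be sketched in a few lines of TypeScript. This is a minimal illustration rather than the project's actual code: the `Finding` shape, the function names, and the prompt wording are all assumptions, and `retrieveTopK` is an in-memory stand-in for the Pinecone query, which in the real pipeline runs against embeddings produced by the Hugging Face API.

```typescript
// Hypothetical shape of one pre-encoded entry in the medical knowledge index.
interface Finding {
  id: string;
  text: string;
  embedding: number[];
}

// Cosine similarity between two equal-length vectors (0 if either is all zeros).
function cosineSimilarity(a: number[], b: number[]): number {
  if (a.length !== b.length) {
    throw new Error("Vectors must have the same length");
  }
  let dot = 0;
  let normA = 0;
  let normB = 0;
  a.forEach((x, i) => {
    const y = b[i] ?? 0; // lengths are equal, so the fallback is never used
    dot += x * y;
    normA += x * x;
    normB += y * y;
  });
  if (normA === 0 || normB === 0) return 0;
  return dot / (Math.sqrt(normA) * Math.sqrt(normB));
}

// In-memory stand-in for a vector store query (assumption: the real system
// asks Pinecone for the nearest neighbours instead of scanning an array).
function retrieveTopK(query: number[], index: Finding[], k: number): Finding[] {
  return index
    .map((finding) => ({ finding, score: cosineSimilarity(query, finding.embedding) }))
    .sort((x, y) => y.score - x.score)
    .slice(0, k)
    .map((scored) => scored.finding);
}

// Prompt augmentation: inject the report text and retrieved findings into
// one prompt string. The instruction wording here is illustrative only.
function buildAugmentedPrompt(
  reportText: string,
  question: string,
  findings: Finding[],
): string {
  const context =
    findings.length > 0
      ? findings.map((f, i) => `${i + 1}. ${f.text}`).join("\n")
      : "(no relevant findings retrieved)";
  return [
    "You are a medical report assistant.",
    "Answer using only the report and the findings below.",
    "If they do not support an answer, say so and suggest consulting a doctor.",
    "",
    "Patient report:",
    reportText.trim(),
    "",
    "Retrieved clinical findings:",
    context,
    "",
    "Question:",
    question.trim(),
  ].join("\n");
}
```

Keeping prompt construction in a pure function like this makes it easy to unit-test the grounding instructions separately from the network calls to the embedding, vector store, and LLM services.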

Tech Stack

- Next.js 14

- TypeScript

- Tailwind CSS

- ShadCN UI

- Vercel AI SDK

- Google Gemini 1.5 Pro

- Gemini Vision API

- Pinecone Vector Database

- Hugging Face Inference API

- Markdown-It

- DOMPurify

- React Hook Form

- Zustand

- Node.js

- Vercel Hosting

Challenges & Learnings

The main challenges were:

- Designing a safe and precise prompt for Gemini that limits hallucinations while still providing relevant insights.

- Building an efficient RAG pipeline that merges real report data with retrieved medical findings.

- Managing streaming LLM responses in real time with proper state synchronization in the chat UI.

- Handling Markdown conversion and XSS sanitization securely for untrusted AI output.

- Integrating multiple APIs (Gemini, Hugging Face, Pinecone) in a serverless architecture without latency bottlenecks.

Key learnings:

- Deep understanding of LLM streaming pipelines using Vercel’s AI SDK.

- Practical implementation of Retrieval-Augmented Generation (RAG) systems.

- Integration of multi-modal AI (text + vision) with Gemini APIs.

- Experience with secure front-end rendering of dynamic AI-generated Markdown.

- Reinforced best practices for error handling, prompt design, and state management in real-time AI apps.
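To make the streaming state synchronization point concrete, here is a minimal sketch of how incoming chunks might be folded into chat state. The `ChatMessage` type and `appendChunk` function are hypothetical names for illustration; in practice, client hooks such as the Vercel AI SDK's `useChat` manage this bookkeeping, and the project's own implementation may differ.

```typescript
type Role = "user" | "assistant";

// Hypothetical chat message shape used only for this sketch.
interface ChatMessage {
  id: string;
  role: Role;
  content: string;
}

// Fold one streamed text chunk into the message list without mutating it.
// If the last message is the assistant reply currently being streamed,
// extend its content; otherwise start a new assistant message.
function appendChunk(
  messages: readonly ChatMessage[],
  chunk: string,
  assistantId: string,
): ChatMessage[] {
  const last = messages[messages.length - 1];
  if (last && last.role === "assistant" && last.id === assistantId) {
    const updated: ChatMessage = { ...last, content: last.content + chunk };
    return [...messages.slice(0, -1), updated];
  }
  const started: ChatMessage = { id: assistantId, role: "assistant", content: chunk };
  return [...messages, started];
}
```

Returning a new array on every chunk keeps the update compatible with React state setters or a Zustand store, where a changed reference is what triggers a re-render; keying on `assistantId` avoids appending a late chunk to the wrong reply.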